The Cost of Agentic Coding

Don’t ask yourself “What if my high-performing engineers spent $2k/month on agentic coding?” …ask yourself why they (and others) aren’t, and what opportunities they’re missing as a result. ...

Every technological revolution has triggered waves of anxiety about the obsolescence of human skills and professions. The current fears that AI will replace artists, eliminate writing jobs, render illustrators obsolete, and devalue creative work follow a well-established historical pattern that’s worth examining critically.

The Democratisation Paradox

When photography emerged in the 19th century, painters predicted the death of portraiture. When home cameras became accessible, professional photographers feared obsolescence. When smartphones put cameras in everyone’s pockets, the same concerns resurfaced [1][2]. Yet professional photography hasn’t vanished; it’s evolved. What actually occurred was a democratisation of image creation, alongside a greater appreciation for truly skilled work [3]. ...

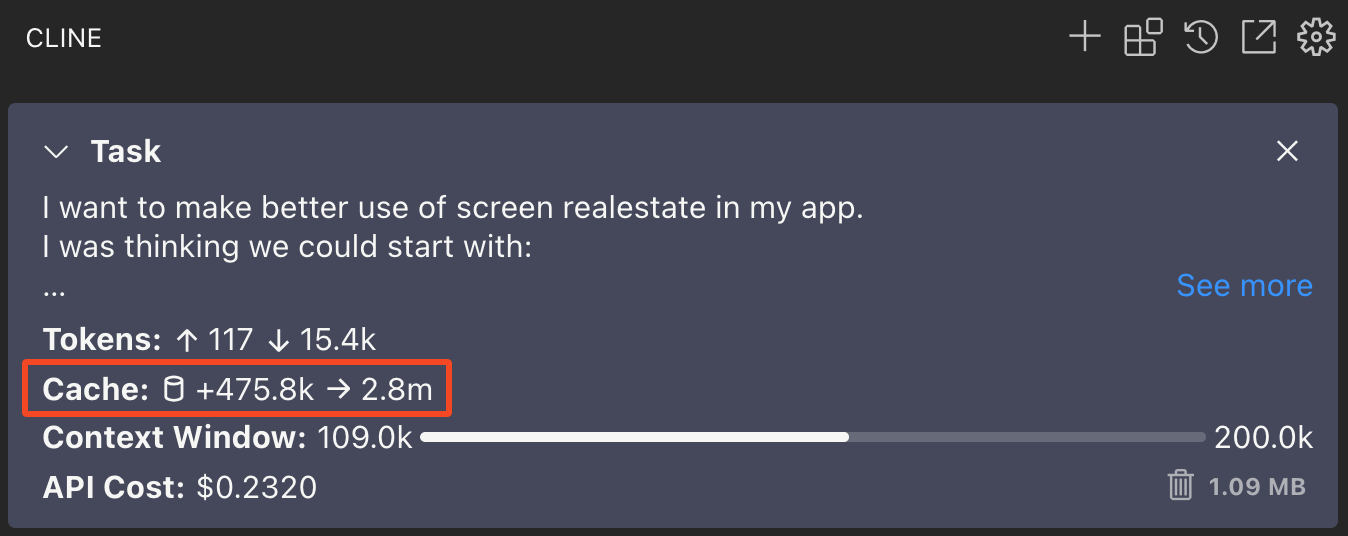

Prompt caching is a feature that Anthropic first offered on their API in 2024. It adds a cache for the tokens used in a request.

Why it matters

Without prompt caching, every token in and out of the API must be processed and paid for in full. This is bad for your wallet, bad for the LLM hosting providers’ bottom line and bad for the environment. This is especially important for agentic coding, where there are a lot of tokens in and out and, importantly, a lot of token reuse, which makes it a perfect use case for prompt caching. ...
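
To make the token-reuse argument concrete, here is a minimal sketch in Python. The request body follows the shape of Anthropic’s Messages API, where a content block is marked cacheable with `cache_control: {"type": "ephemeral"}`; the model name is a placeholder. The cost model is an assumption-laden simplification: it assumes cache writes cost 1.25x and cache reads 0.1x the base input price, uses a hypothetical $3 per million input tokens, treats the whole resent prefix as a cache hit, and ignores output tokens and cache expiry. Check your provider’s current pricing before relying on the numbers.

```python
import json

# Assumed pricing model (verify against your provider's current pricing):
# cache writes cost 1.25x the base input price, cache reads cost 0.1x.
BASE_INPUT_USD_PER_MTOK = 3.00  # hypothetical base input price per million tokens
CACHE_WRITE_MULTIPLIER = 1.25
CACHE_READ_MULTIPLIER = 0.10


def build_cached_request(model, system_prompt, user_message, max_tokens=1024):
    """Messages API request body marking the large, reused system prompt as cacheable."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": system_prompt,
                "cache_control": {"type": "ephemeral"},  # cache breakpoint
            }
        ],
        "messages": [{"role": "user", "content": user_message}],
    }


def session_input_cost(new_tokens_per_turn, use_cache):
    """Input-token cost in USD of an agent session.

    Each turn resends the whole conversation so far (the prefix) plus new tokens.
    Simplification: with caching, the entire prefix is a cache hit and every new
    token is written to the cache; output tokens and cache expiry are ignored.
    """
    total_usd = 0.0
    prefix = 0
    for new in new_tokens_per_turn:
        if use_cache:
            billable = prefix * CACHE_READ_MULTIPLIER + new * CACHE_WRITE_MULTIPLIER
        else:
            billable = prefix + new
        total_usd += billable * BASE_INPUT_USD_PER_MTOK / 1_000_000
        prefix += new
    return total_usd


if __name__ == "__main__":
    body = build_cached_request("your-model-name", "Long project context...", "Fix the failing test")
    print(json.dumps(body, indent=2))
    turns = [10_000] * 20  # hypothetical: 20 turns, each adding 10k tokens of context
    print(f"without cache: ${session_input_cost(turns, False):.2f}")
    print(f"with cache:    ${session_input_cost(turns, True):.2f}")
```

With these assumptions, 20 turns that each add 10k tokens cost $6.30 in input tokens without caching and $1.32 with it, because the growing prefix that an agent resends every turn is billed at the cheap cache-read rate.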

Apologies for the video quality; Google Meet/Hangouts records in very low resolution and bitrate.

Links mentioned in the video:
• Cline
• Roo Code (Cline fork with some experimental features)
• MCP: https://modelcontextprotocol.io/introduction
• The package-version MCP server I created: https://github.com/sammcj/mcp-package-version
• https://smithery.ai (index of MCP servers)
• https://mcp.so (index of MCP servers)
• https://glama.ai/mcp/servers (index of MCP servers)

Explaining the concept of K/V context cache quantisation, why it matters and the journey to integrate it into Ollama.

Why K/V Context Cache Quantisation Matters

The introduction of K/V context cache quantisation in Ollama is significant, offering users a range of benefits:

• Run Larger Models: With reduced VRAM demands, users can now run larger, more powerful models on their existing hardware.
• Expand Context Sizes: Larger context sizes allow LLMs to consider more information, potentially leading to more comprehensive and nuanced responses. For tasks like coding, where longer context windows are beneficial, K/V quantisation can be a game-changer.
• Reduce Hardware Utilisation: Free up memory, or run LLMs closer to the limits of your hardware.

Running the K/V context cache at Q8_0 quantisation effectively halves the VRAM required for the context compared to the default F16, with minimal quality impact on the generated outputs, while Q4_0 cuts it down to just one third (at the cost of some noticeable quality reduction). ...
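
A rough way to see where these savings come from is to estimate the cache size directly. The sketch below assumes the usual layout (one K and one V tensor per layer, each holding context length × KV heads × head dimension values) and the ggml block formats, where Q8_0 and Q4_0 store blocks of 32 values plus one F16 scale per block. The model dimensions in the example (32 layers, 8 KV heads, head dimension 128) are an assumption resembling an 8B Llama-3-style model; real usage also includes padding and other buffers, so treat the result as an estimate.

```python
# Bytes per cached value. Q8_0 and Q4_0 store blocks of 32 values plus a
# 2-byte F16 scale per block (ggml block formats).
BYTES_PER_ELEMENT = {
    "f16": 2.0,
    "q8_0": 34 / 32,  # 32 x int8 + 2-byte scale = 8.5 bits per value
    "q4_0": 18 / 32,  # 32 x 4-bit + 2-byte scale = 4.5 bits per value
}


def kv_cache_bytes(n_layers, n_ctx, n_kv_heads, head_dim, cache_type="f16"):
    """Estimated K/V cache size in bytes (excludes padding and compute buffers)."""
    elements = 2 * n_layers * n_ctx * n_kv_heads * head_dim  # 2 = K and V
    return elements * BYTES_PER_ELEMENT[cache_type]


if __name__ == "__main__":
    # Hypothetical dimensions, roughly an 8B Llama-3-style model at 32k context.
    dims = dict(n_layers=32, n_ctx=32_768, n_kv_heads=8, head_dim=128)
    for cache_type in ("f16", "q8_0", "q4_0"):
        gib = kv_cache_bytes(cache_type=cache_type, **dims) / 2**30
        print(f"{cache_type:>5}: {gib:.3f} GiB")
```

For these dimensions the estimate is 4 GiB at F16, 2.125 GiB at Q8_0 and 1.125 GiB at Q4_0, i.e. about 53% and 28% of the F16 size respectively.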

TL;DR: Maybe. Longer answer: Capitalism sucks, hard. It’s the bad card we’ve been dealt; for-profit companies will always be looking to reduce costs and increase profits. If they can reduce expenses by automating an activity, they will (eventually). To look at this another way: if the output of your job is repeatable and not creative, one could argue that (other than providing you with an income) it might not be the best use of your time. ...

The two tools I use with AI/LLMs for generating diagrams are Mermaid and Excalidraw. Mermaid (or MermaidJS) is a popular diagramming library and format supported by many tools, and is often rendered inside Markdown (e.g. in a readme.md). Excalidraw is an excellent, free and open-source diagramming and visualisation tool. I also often use a third-party Obsidian plugin for Excalidraw.

Excalidraw

Excalidraw has a ‘generate diagram with AI’ feature which, if you’re using the excalidraw.com online editor, offers a few free generations each day (I think this uses a low-end OpenAI model). If you’re running Excalidraw locally or using the brilliant Obsidian plugin, you can provide any OpenAI-compatible API endpoint and model for AI generations. Behind the scenes, Excalidraw AI generates MermaidJS and then renders it. ...
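
Because Mermaid is plain text, it is easy for an LLM (or a script) to emit. As an illustration of the format, here is a small Python sketch that turns a list of edges into a Mermaid flowchart definition. The helper `to_mermaid_flowchart` is hypothetical, and it assumes node names are simple identifiers without spaces or punctuation.

```python
def to_mermaid_flowchart(edges, direction="TD"):
    """Build a Mermaid flowchart from (source, target) or (source, target, label) tuples.

    Assumes node names are simple identifiers (no spaces or Mermaid punctuation).
    """
    lines = [f"flowchart {direction}"]
    for edge in edges:
        if len(edge) == 3:
            source, target, label = edge
            lines.append(f"    {source} -->|{label}| {target}")
        else:
            source, target = edge
            lines.append(f"    {source} --> {target}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_mermaid_flowchart([("User", "Prompt"), ("Prompt", "LLM", "sends"), ("LLM", "Diagram")]))
```

The output can be pasted into a `mermaid` code fence in a readme.md, or into any other tool that renders Mermaid.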

Ingest is a tool I’ve written to make my life easier when preparing content for LLMs. It parses directories of plain text files, such as source code and documentation, into a single Markdown file suitable for ingestion by AI/LLMs. Ingest can also estimate vRAM requirements for a given model, quantisation and context length.

Features

• Traverse directory structures and generate a tree view
• Include/exclude files based on glob patterns
• Estimate vRAM requirements and check model compatibility using another package I’ve created called quantest
• Pass output directly to LLMs such as Ollama or any OpenAI-compatible API for processing
• Generate and include git diffs and logs
• Count approximate tokens for LLM compatibility
• Customisable output templates
• Copy output to clipboard (when available)
• Export to file or print to console
• Optional JSON output

Ingest Intro (“Podcast” Episode): ...
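
The core idea (walk a directory, filter by glob patterns, concatenate files into one Markdown document, estimate tokens) can be sketched in a few lines of Python. This is not Ingest’s implementation: `directory_to_markdown` and `approx_tokens` are hypothetical helpers, the glob matching uses Python’s `fnmatch` (where `*` also matches `/`), and the token count uses the rough rule of thumb of about 4 characters per token rather than a real tokenizer.

```python
import fnmatch
from pathlib import Path

FENCE = "`" * 3  # built at runtime so this snippet can itself sit inside a Markdown fence


def directory_to_markdown(root, include=("*",), exclude=()):
    """Concatenate matching text files under `root` into one Markdown document.

    Patterns are matched with fnmatch against POSIX-style relative paths;
    `*` also matches `/`, so "*.py" matches files in subdirectories too.
    """
    root = Path(root)
    sections = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        rel = path.relative_to(root).as_posix()
        if not any(fnmatch.fnmatch(rel, pat) for pat in include):
            continue
        if any(fnmatch.fnmatch(rel, pat) for pat in exclude):
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        sections.append(f"## {rel}\n\n{FENCE}\n{text.rstrip()}\n{FENCE}\n")
    return "\n".join(sections)


def approx_tokens(text):
    """Very rough token estimate using the ~4 characters per token rule of thumb."""
    return len(text) // 4
```

For example, `directory_to_markdown("my-project", include=("*.py", "*.md"), exclude=("*test*",))` would produce one section per matching file, headed by its relative path, ready to paste into a prompt.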

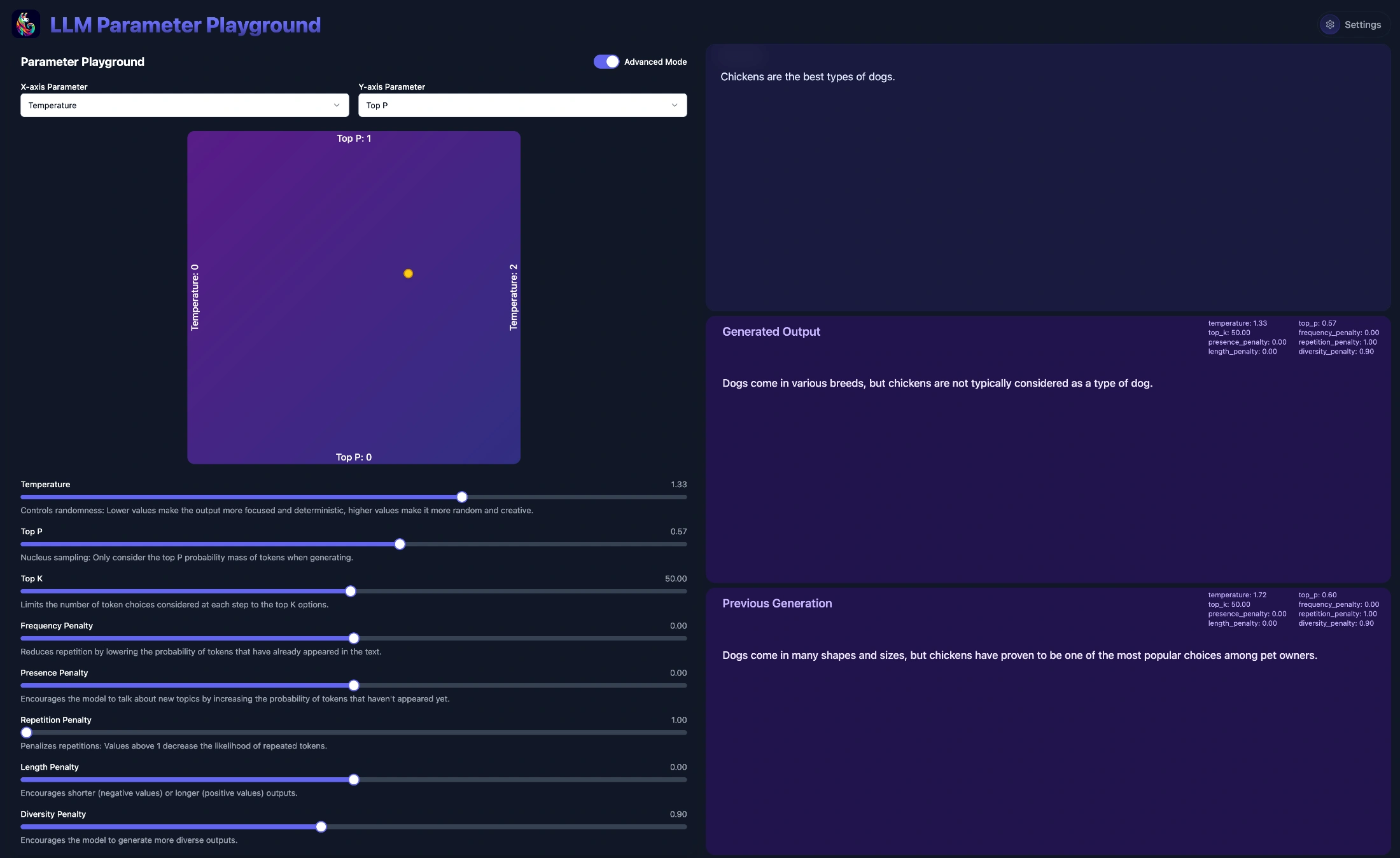

Here’s a fun little tool I’ve been hacking on to explore the effects of different inference parameters on LLMs. You can find the code and instructions for running it locally on GitHub. It started as a fork of rooben-me’s tone-changer-open, which itself was a “fork” of Figma’s tone generator. I’ve made quite a few changes to focus it on local LLMs and advanced parameter exploration.
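
For a feel of what two of the most common inference parameters do, here is a small Python sketch of standard temperature scaling and top-p (nucleus) filtering over a toy set of logits. It illustrates the general techniques only; it is not taken from the tool, and model servers differ in which samplers they apply and in what order.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; lower temperature sharpens, higher flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total probability reaches top_p, then renormalise."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # toy scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
    print(top_p_filter(softmax_with_temperature(logits, 1.0), 0.9))
```

Raising the temperature spreads probability towards less likely tokens, while a lower top-p removes the long tail entirely; exploring how these interact is exactly what the tool is for.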

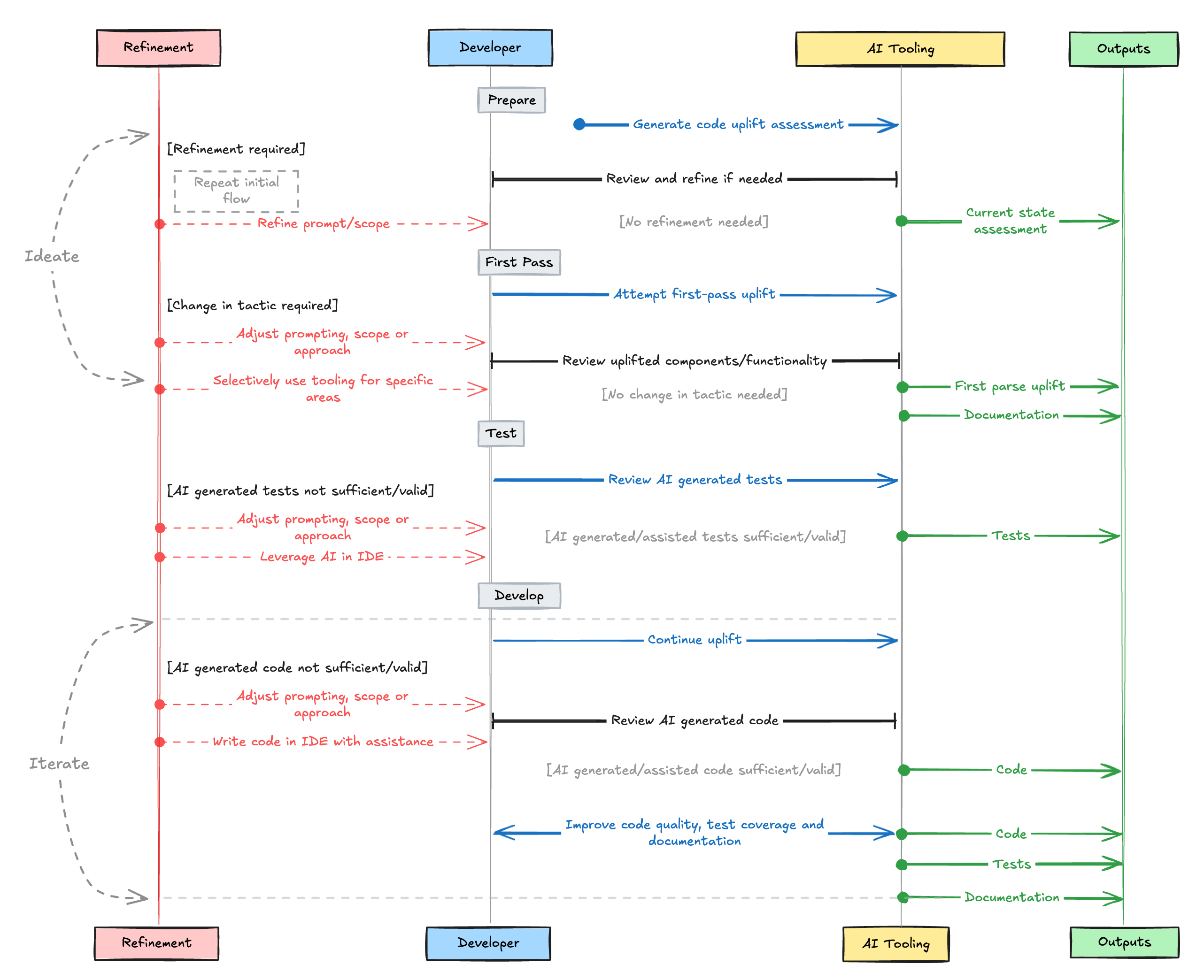

Code, Chaos, and Copilots is a talk I gave in July 2024 as an intro to how I use AI/LLMs to augment my capabilities every day. It covers:

• What I use AI/LLMs for
• Prompting tips
• Codegen workflow
• Picking the right models
• Model formats
• Context windows
• Quantisation
• Model servers
• Inference parameters
• Clients & tools
• Getting started cheat-sheets

Download Slide Deck

Disclaimer: I’m not an ML engineer or data scientist. As such, the information presented here is based on my understanding of the subject and may not be 100% accurate or complete. ...