Confuddlement on GitHub

I was tired of manually downloading Confluence pages and converting them to Markdown, so I wrote a small command-line tool to automate the process.

Confuddlement is a Go-based tool that uses the Confluence REST API to fetch page content and convert it to Markdown files.
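To make the fetch step concrete, here is a minimal sketch of how a tool like this might build a request against the Confluence REST API content listing and decode the response. It is not taken from Confuddlement's source: the helper names (`contentURL`, `parsePages`), the example base URL, and the choice of the `body.storage` expansion are assumptions for illustration. The conversion of the returned storage-format HTML to Markdown is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
)

// page mirrors the small subset of a Confluence content result used here.
type page struct {
	ID    string `json:"id"`
	Title string `json:"title"`
	Body  struct {
		Storage struct {
			Value string `json:"value"` // page body in Confluence storage format (HTML-like)
		} `json:"storage"`
	} `json:"body"`
}

// contentResponse is the envelope around a list of content results.
type contentResponse struct {
	Results []page `json:"results"`
}

// contentURL (hypothetical helper) builds the listing URL for one space and
// asks the API to include the storage-format body of each page.
func contentURL(baseURL, spaceKey string, limit int) string {
	q := url.Values{}
	q.Set("spaceKey", spaceKey)
	q.Set("expand", "body.storage")
	q.Set("limit", fmt.Sprint(limit))
	return baseURL + "/rest/api/content?" + q.Encode()
}

// parsePages (hypothetical helper) decodes a content listing response.
func parsePages(data []byte) ([]page, error) {
	var resp contentResponse
	if err := json.Unmarshal(data, &resp); err != nil {
		return nil, fmt.Errorf("decoding content response: %w", err)
	}
	return resp.Results, nil
}

func main() {
	// The base URL is a placeholder, not a real site.
	fmt.Println(contentURL("https://example.atlassian.net/wiki", "COOLTEAM", 25))

	// A hand-written sample response, so the sketch runs without network access.
	sample := []byte(`{"results":[{"id":"1","title":"Feature List","body":{"storage":{"value":"<p>Hello</p>"}}}]}`)
	pages, err := parsePages(sample)
	if err != nil {
		panic(err)
	}
	for _, p := range pages {
		fmt.Printf("%s: %s\n", p.Title, p.Body.Storage.Value)
	}
}
```

In a real run the URL would be requested with `net/http` using the credentials from the environment, and the response body passed to `parsePages`.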

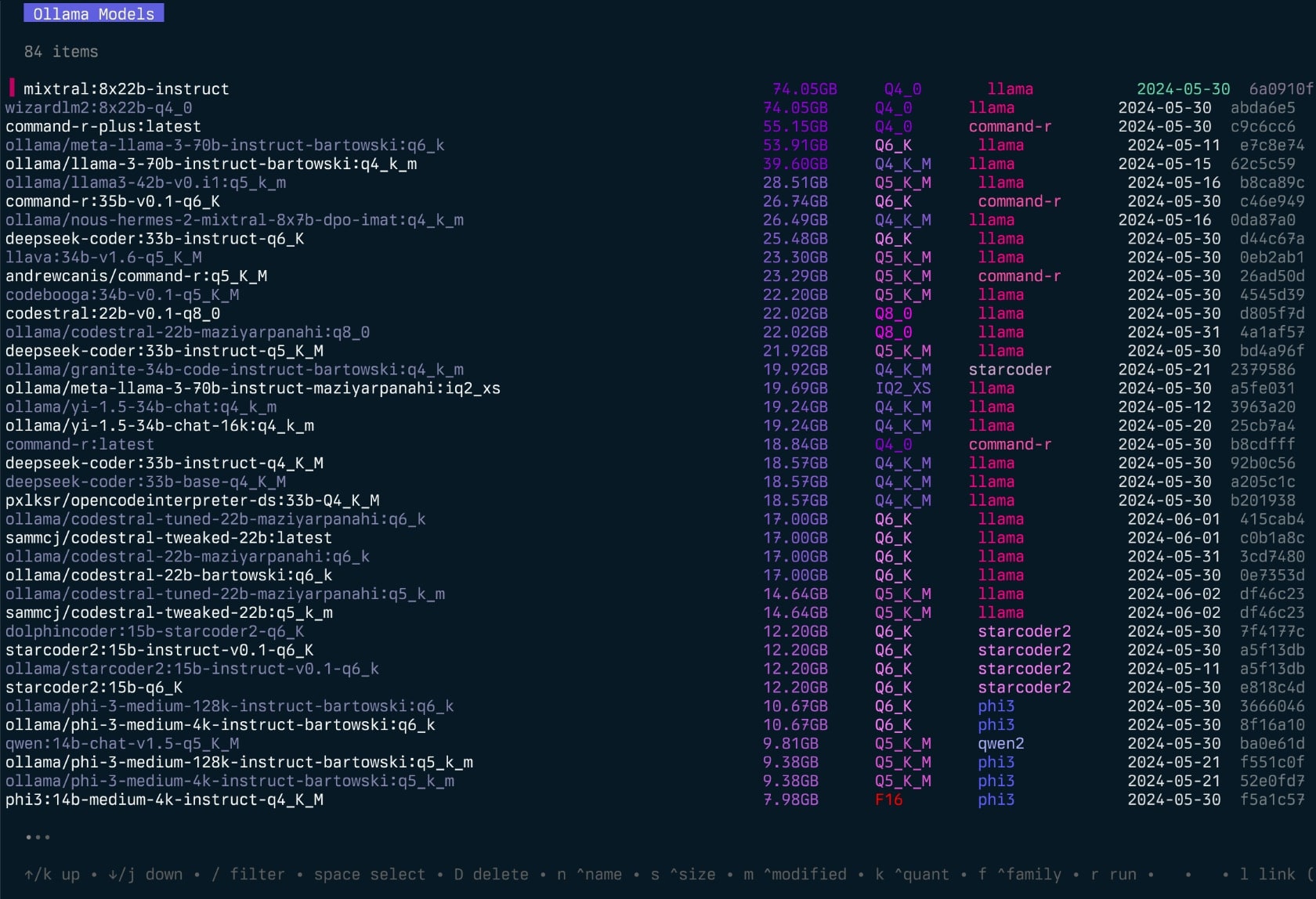

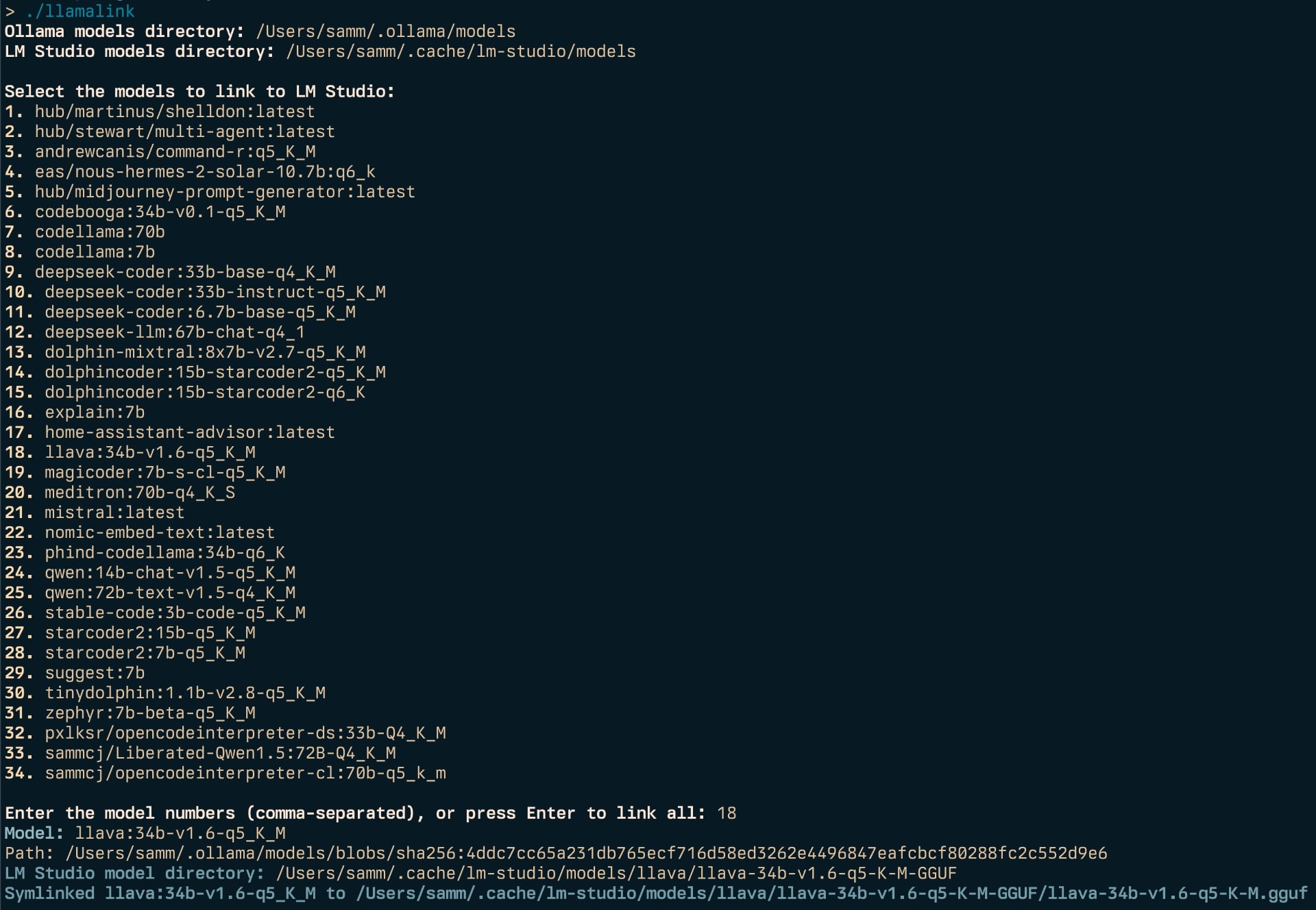

It can fetch pages from multiple spaces, skip pages that have already been fetched, and summarise the content of fetched pages using the Ollama API.
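Skipping pages that were already fetched can be as simple as checking whether the output file exists. The sketch below shows one way to do that. It is an assumption about the approach, not the tool's actual code, and the helpers `outputPath`, `savePage`, and `alreadyFetched` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
	"io/fs"
	"os"
	"path/filepath"
)

// outputPath returns where a page with the given title would be saved.
// Assumption: one Markdown file per page, named after the page title.
func outputPath(dir, title string) string {
	return filepath.Join(dir, title+".md")
}

// savePage writes the Markdown content, creating the directory if needed.
func savePage(dir, title, content string) error {
	if err := os.MkdirAll(dir, 0o755); err != nil {
		return err
	}
	return os.WriteFile(outputPath(dir, title), []byte(content), 0o644)
}

// alreadyFetched reports whether a Markdown file for the page already exists.
func alreadyFetched(dir, title string) (bool, error) {
	_, err := os.Stat(outputPath(dir, title))
	if err == nil {
		return true, nil
	}
	if errors.Is(err, fs.ErrNotExist) {
		return false, nil
	}
	return false, err // e.g. a permission problem: report it rather than guess
}

func main() {
	dir, err := os.MkdirTemp("", "confluence_dump")
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)

	// Pretend one page was saved by an earlier run.
	if err := savePage(dir, "Decision log", "# Decision log\n"); err != nil {
		panic(err)
	}
	for _, title := range []string{"Decision log", "Retrospectives"} {
		skip, err := alreadyFetched(dir, title)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%s: skip=%v\n", title, skip)
	}
}
```

A title containing a path separator would need sanitising before it is used as a file name; the sketch leaves that out.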

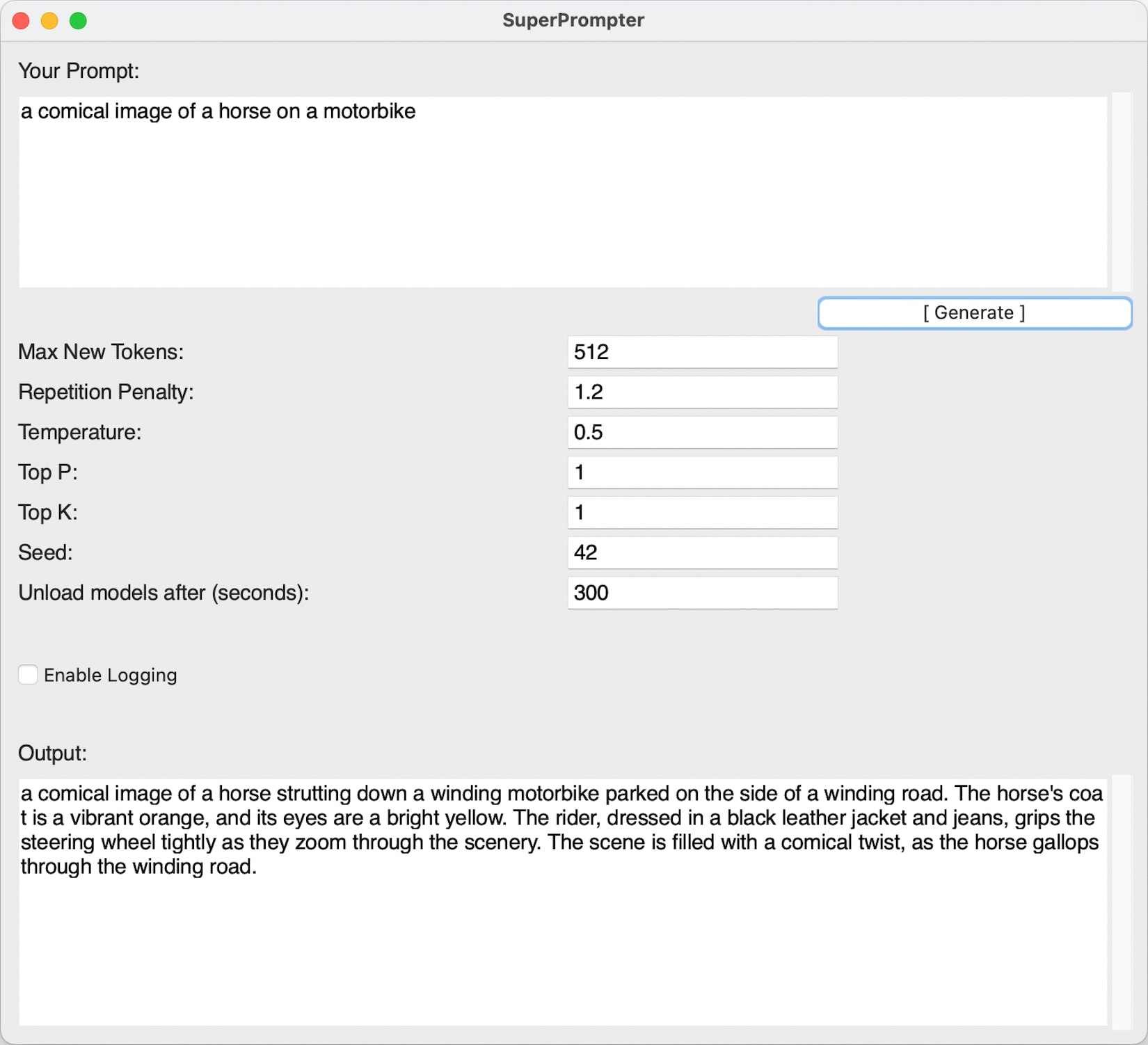

    $ go run ./main.go
    Confuddlement 0.3.0
    Spaces: [COOLTEAM, MANAGEMENT]
    Fetching content from space COOLTEAM
    COOLTEAM (Totally Cool Team Homepage)
    Retrospectives
    Decision log
    Development Onboarding
    Saved page COOLTEAM - Feature List to ./confluence_dump/COOLTEAM - Feature List.md
    Skipping page 7. Support, less than 300 characters
    MANAGEMENT (Department of Overhead and Bureaucracy)
    Painful Change Management
    Illogical Diagrams
    Saved page ./confluence_dump/Painful Change Management.md
    Saved page Illogical Diagrams to ./confluence_dump/Illogical Diagrams.md
    Done!
    $ go run ./main.go summarise
    Select a file to summarise:
    0: + COOLTEAM - Feature List
    1: + Painful Change Management
    2: + Illogical Diagrams
    Enter the number of the file to summarise: 1
    Summarising Painful Change Management...
    "Change management in the enterprise is painful and slow. It involves many forms and approvals."
    $ go run main.go -q 'who is the CEO?' -s 'management' -r 2
    Querying the LLM with the prompt 'who is the CEO?'...
    "The CEO of the company is Peewee Herman."

## Usage

### Running the Program

1. Copy `.env.template` to `.env` and update the environment variables.
2. Run the program with `go run main.go`, or build it with `go build` and run the resulting executable.

The program will fetch Confluence pages and save them as Markdown files in the specified directory.

### Querying the documents with AI

You can summarise the content of a fetched page using the Ollama API by running the program with the `summarise` argument:

    go run main.go summarise

To perform a custom query, pass the following flags:

- `-q`: The query to provide to the LLM.
- `-s`: The search term to match documents against.
- `-r`: The number of lines before and after the search term to include in the context to the LLM.

For example:

    go run main.go -q 'who is the CEO?' -s 'management' -r 2
    Querying the LLM with the prompt 'who is the CEO?'...
    "The CEO of the company is Peewee Herman."

...
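The `-s` and `-r` flags describe a grep-style context window: find lines that match the search term, then keep a few lines on either side. Here is a minimal sketch of that idea, followed by packing the result into a request body for Ollama's `/api/generate` endpoint. The function names, the case-insensitive matching, the prompt layout, and the model name `llama3` are all assumptions for illustration. The tool itself may do this differently.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// contextLines returns each line that contains term (case-insensitively,
// which is an assumption), together with up to radius lines before and
// after it. Overlapping windows are merged so no line is returned twice.
func contextLines(text, term string, radius int) []string {
	lines := strings.Split(text, "\n")
	keep := make([]bool, len(lines))
	needle := strings.ToLower(term)
	for i, line := range lines {
		if !strings.Contains(strings.ToLower(line), needle) {
			continue
		}
		start, end := i-radius, i+radius
		if start < 0 {
			start = 0
		}
		if end > len(lines)-1 {
			end = len(lines) - 1
		}
		for j := start; j <= end; j++ {
			keep[j] = true
		}
	}
	var out []string
	for i, line := range lines {
		if keep[i] {
			out = append(out, line)
		}
	}
	return out
}

// generateRequest holds the fields sent to Ollama's /api/generate endpoint.
type generateRequest struct {
	Model  string `json:"model"`
	Prompt string `json:"prompt"`
	Stream bool   `json:"stream"` // false asks for a single, non-streamed reply
}

// buildRequest (hypothetical helper) combines context and question into one payload.
func buildRequest(model, question string, context []string) ([]byte, error) {
	prompt := "Context:\n" + strings.Join(context, "\n") + "\n\nQuestion: " + question
	return json.Marshal(generateRequest{Model: model, Prompt: prompt, Stream: false})
}

func main() {
	// Made-up document text, only to exercise the functions.
	doc := "Org chart\nThe management team meets weekly.\nThe CEO chairs the meeting.\nLunch is provided.\nParking is limited."
	ctx := contextLines(doc, "management", 1)
	payload, err := buildRequest("llama3", "who is the CEO?", ctx)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(payload))
}
```

Keeping the window small limits how much text is sent to the model, at the cost of missing answers that sit further from the search term.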