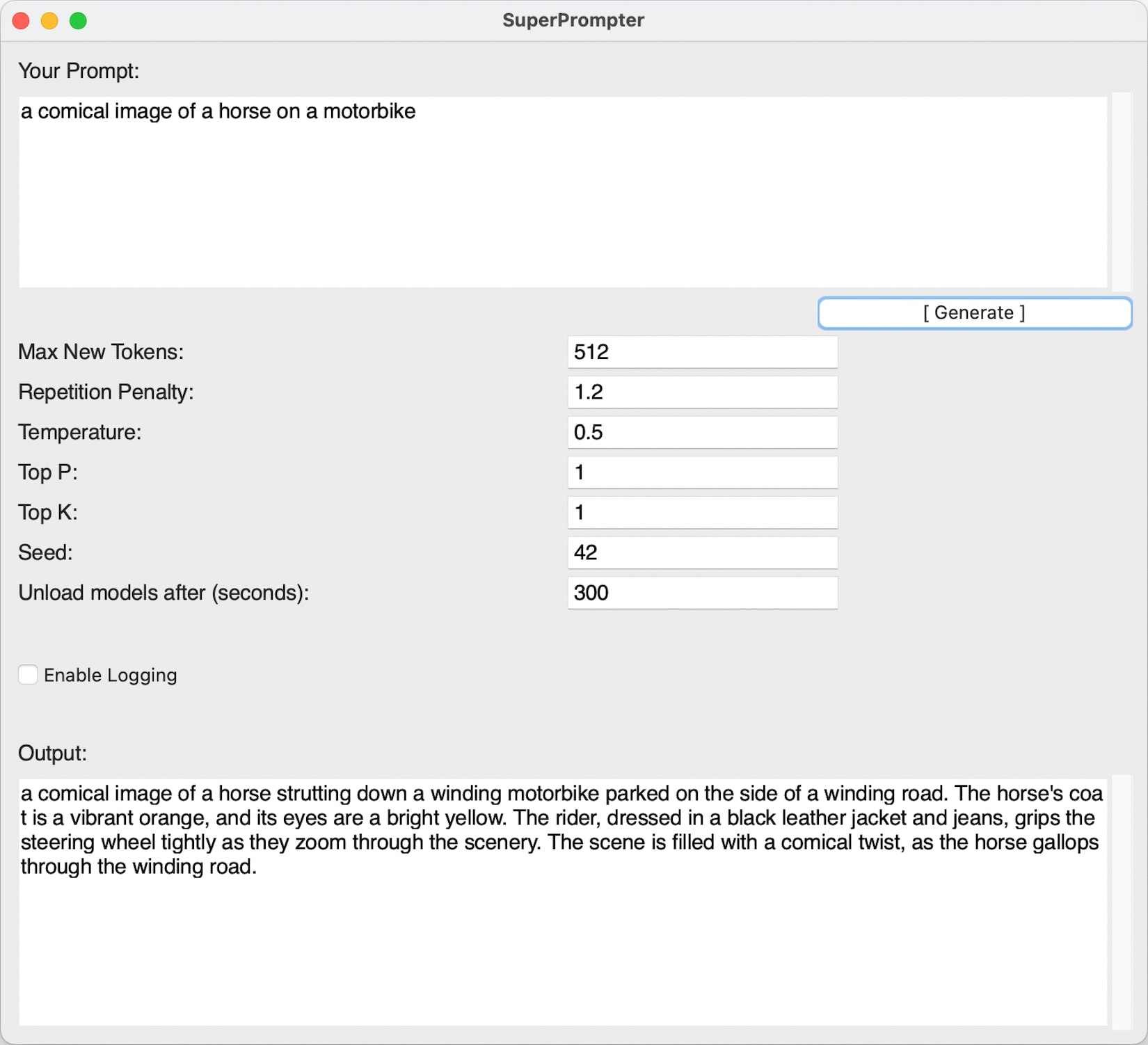

SuperPrompter - Supercharge your text prompts for AI/LLM image generation

SuperPrompter is a Python-based application that utilises the SuperPrompt-v1 model to generate optimised text prompts for AI/LLM image generation (for use with Stable Diffusion etc…) from user prompts.

See Brian Fitzgerald’s Blog for a detailed explanation of the SuperPrompt-v1 model and its capabilities / limitations.
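As a rough illustration of what happens behind the GUI, the sketch below maps the user-facing parameters onto a Hugging Face `transformers` generation call. It is not taken from the SuperPrompter source: the `GenerationParams` class, its default values, and the `generate_prompt` helper are hypothetical, and the model ID, tokenizer ID, and task prefix are assumptions based on the public SuperPrompt-v1 model card.

```python
from dataclasses import dataclass


@dataclass
class GenerationParams:
    """Hypothetical container for the GUI parameters; defaults are illustrative."""
    max_new_tokens: int = 77
    repetition_penalty: float = 1.2
    temperature: float = 0.5
    top_p: float = 1.0
    top_k: int = 50
    seed: int = 42

    def to_generate_kwargs(self) -> dict:
        """Translate the parameters into keyword arguments for `model.generate()`."""
        return {
            "max_new_tokens": self.max_new_tokens,
            "repetition_penalty": self.repetition_penalty,
            # Sampling must be enabled for temperature / top_p / top_k to have an effect.
            "do_sample": True,
            "temperature": self.temperature,
            "top_p": self.top_p,
            "top_k": self.top_k,
        }


def generate_prompt(user_prompt: str, params: GenerationParams) -> str:
    """Expand a short user prompt into a more detailed one (sketch only)."""
    # Imported lazily so this module can be loaded without the heavy dependencies.
    import torch
    from transformers import T5ForConditionalGeneration, T5Tokenizer

    # Assumed IDs and task prefix (from the model card, not from this README).
    tokenizer = T5Tokenizer.from_pretrained("google/flan-t5-small")
    model = T5ForConditionalGeneration.from_pretrained("roborovski/superprompt-v1")

    torch.manual_seed(params.seed)  # makes sampling repeatable for a given seed
    text = "Expand the following prompt to add more detail: " + user_prompt
    input_ids = tokenizer(text, return_tensors="pt").input_ids
    outputs = model.generate(input_ids, **params.to_generate_kwargs())
    return tokenizer.decode(outputs[0], skip_special_tokens=True)
```

The seed is applied through `torch.manual_seed` rather than passed to `generate()`, which is why it does not appear in the keyword arguments.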

Features

- Utilises the SuperPrompt-v1 model for text generation.

- A basic (aka ugly) graphical user interface built with tkinter.

- Customisable generation parameters (max new tokens, repetition penalty, temperature, top p, top k, seed).

- Optional logging of input parameters and generated outputs.

- Bundling options to include or exclude pre-downloaded model files.

- Unloads the models when the application is idle to free up memory.
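The idle-unloading feature could be implemented in several ways; the sketch below shows one possible approach using a restartable timer. The `IdleModelHolder` class, the `loader` callable, and the timeout value are all hypothetical and are not taken from the SuperPrompter source.

```python
import threading
from typing import Any, Callable, Optional


class IdleModelHolder:
    """Loads a model on demand and drops it after `idle_seconds` without use."""

    def __init__(self, loader: Callable[[], Any], idle_seconds: float = 300.0):
        self._loader = loader
        self._idle_seconds = idle_seconds
        self._model: Optional[Any] = None
        self._timer: Optional[threading.Timer] = None
        self._lock = threading.Lock()

    def get(self) -> Any:
        """Return the model, loading it first if needed, and reset the idle timer."""
        with self._lock:
            if self._model is None:
                self._model = self._loader()
            self._restart_timer()
            return self._model

    def is_loaded(self) -> bool:
        with self._lock:
            return self._model is not None

    def _restart_timer(self) -> None:
        # Caller must hold the lock.
        if self._timer is not None:
            self._timer.cancel()
        timer = threading.Timer(self._idle_seconds, lambda: self._unload(timer))
        timer.daemon = True  # do not keep the process alive just for the timer
        self._timer = timer
        timer.start()

    def _unload(self, timer: threading.Timer) -> None:
        with self._lock:
            # Ignore timers that were superseded by a later call to get().
            if self._timer is timer:
                self._model = None
                self._timer = None
```

Dropping the last reference lets Python reclaim the memory; with a real PyTorch model on a GPU, an explicit `gc.collect()` and `torch.cuda.empty_cache()` may also be needed.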

Prebuilt Binaries

Check the releases page to see whether prebuilt binaries are available for your platform.

Building

Prerequisites

- Python 3.x

- Required Python packages (listed in requirements.txt)

- python-tk (brew install python-tk)
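Because the GUI depends on tkinter, it can be worth confirming that the interpreter you plan to use can actually import it before installing anything else. The check below is a small illustrative helper (the function name is hypothetical) and is not part of the project.

```python
def tkinter_available() -> bool:
    """Return True if this interpreter can import tkinter."""
    try:
        import tkinter  # noqa: F401  (only the import itself matters here)
    except ImportError:
        return False
    return True


if __name__ == "__main__":
    if tkinter_available():
        print("tkinter found")
    else:
        print("tkinter missing: install python-tk (for example, brew install python-tk)")
```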

Installation

Clone the repository:

git clone https://github.com/yourusername/SuperPrompter.git

Navigate to the project directory:

cd SuperPrompter

Create a virtual environment (optional but recommended):

make venv

Install the required packages:

make install

Usage

Run the application:

make run

- On first run, it will download the SuperPrompt-v1 model files.

- Subsequent runs will use the downloaded model files.

- Once the model is loaded, the main application window will appear.

- Enter your prompt in the “Your Prompt” text area.

- Adjust the generation parameters (max new tokens, repetition penalty, temperature, top p, top k, seed) as desired.

- Click the “Generate” button or press Enter to generate text based on the provided prompt and parameters.

- The generated output will be displayed in the “Output” text area.

- Optionally, enable logging by checking the “Enable Logging” checkbox.

- When enabled, the input parameters and generated outputs will be saved to the log file ~/.superprompter/superprompter_log.txt in the user’s home directory.
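The logging step described above could look something like the following sketch. Only the log file path comes from this README; the `log_generation` helper and the one-JSON-object-per-line record format are assumptions made for illustration, and the application's actual log format may differ.

```python
import json
from datetime import datetime
from pathlib import Path
from typing import Optional

# Path as documented in this README; the record layout below is assumed.
DEFAULT_LOG_PATH = Path.home() / ".superprompter" / "superprompter_log.txt"


def log_generation(prompt: str, params: dict, output: str,
                   log_path: Optional[Path] = None) -> Path:
    """Append one record (input parameters and generated output) to the log file."""
    path = log_path or DEFAULT_LOG_PATH
    path.parent.mkdir(parents=True, exist_ok=True)  # create ~/.superprompter if missing
    record = {
        "timestamp": datetime.now().isoformat(timespec="seconds"),
        "prompt": prompt,
        "params": params,
        "output": output,
    }
    # Append mode keeps earlier records; one JSON object per line.
    with path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, ensure_ascii=False) + "\n")
    return path
```

Writing one self-contained record per line keeps the file easy to append to and easy to parse later.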

Bundling

SuperPrompter can be bundled into a standalone executable using PyInstaller.

The bundling process is automated with a Makefile and a bundle.py script.

To bundle the application:

Install deps:

make venv

make install

Check it runs:

make run

Run the bundling command:

make bundle

This command will download the SuperPrompt-v1 model files and bundle the application with the model files included. Alternatively, if you want to bundle the application without including the model files (they will be downloaded at runtime), run:

make bundleWithOutModels

The bundled executable will be available in the dist directory.
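To give a feel for the difference between the two bundling targets, here is a minimal sketch of how a script might invoke PyInstaller with or without the model files. It is not the project's bundle.py: the function names, the entry script name `superprompter.py`, and the `model_files` directory are hypothetical placeholders.

```python
import os
from typing import List


def build_pyinstaller_args(entry_script: str, include_models: bool,
                           model_dir: str = "model_files") -> List[str]:
    """Build a PyInstaller argument list, optionally bundling a model directory."""
    args = [entry_script, "--name", "SuperPrompter", "--windowed", "--noconfirm"]
    if include_models:
        # --add-data takes SOURCE<sep>DEST. Older PyInstaller releases expect
        # os.pathsep as the separator, so it is used here for compatibility.
        args += ["--add-data", f"{model_dir}{os.pathsep}{model_dir}"]
    return args


def bundle(include_models: bool) -> None:
    """Run PyInstaller in-process (requires PyInstaller to be installed)."""
    import PyInstaller.__main__  # imported lazily; only needed when bundling

    # "superprompter.py" is a placeholder for the real entry point.
    PyInstaller.__main__.run(build_pyinstaller_args("superprompter.py", include_models))
```

When the model files are left out, the only change in this sketch is the absence of the `--add-data` pair, so the application would need to download them at runtime.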

Contributing

Contributions are welcome! If you find any issues or have suggestions for improvements, please open an issue or submit a pull request.

License

I have open sourced the codebase under the MIT License and it is freely available for download on GitHub.